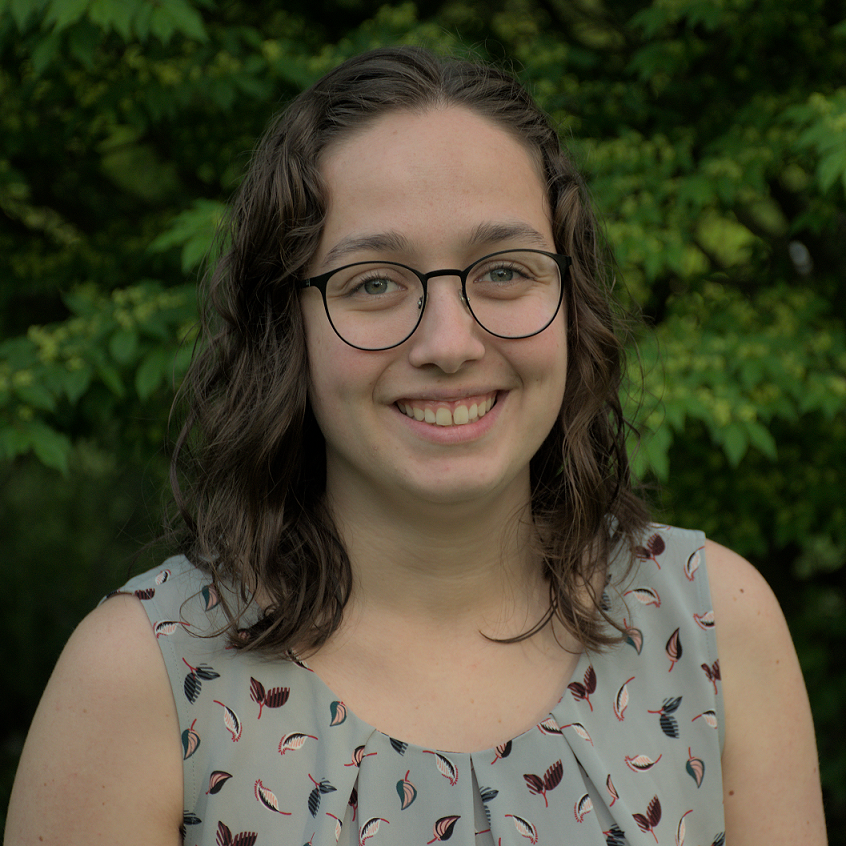

Nicole was selected from an extremely competitive application pool to become a 2022 Gatzert Child Welfare Fellow. This fellowship will support Nicole in her research and as she writes her dissertation contributing to the lives of children with disabilities. Way to go, Nicole! ConGATZERTulation!

Ubiquitous Rehabilitation

Introducing Dr. Yamagami

Congratulations to Dr. Momona Yamagami on earning her Doctorate in Electrical and Computer Engineering! Dr. Yamagami’s PhD dissertation was titled “Modeling and enhancing human-machine interaction for accessibility and health.” Congratulations and best of luck as you move forward as a CREATE Postdoctoral Researcher.

Momona Yamagami wins the College of Engineering Student Research Award. Congratulations, Momona!

The College of Engineering Awards acknowledge the extraordinary efforts of the college’s teaching and research assistants, staff, and faculty members. Momona Yamagami was selected for the 2021 Student Research Award. Congratulations, Momona!

Momona Yamagami is an innovative researcher who focuses on developing novel accessible technologies with translational impact. In her first year, she helped build an interdisciplinary research program that blended neuroengineering, human-computer interaction and rehabilitation at the Amplifying Motion and Performance (AMP) Lab to evaluate and mitigate symptoms of Parkinson’s disease using virtual reality. Dedicated to building accessible and inclusive technology, she is working to apply control theory and artificial intelligence to improve device accessibility for people with and without limited motion.

“Momona is a truly exceptional student with a demonstrated history of leadership in research and education. We cannot wait to see where Momona steers her career trajectory and research contributions.”

Reimagining Mobility

Microsoft has paired with UW to CREATE!

At its annual Ability Summit, Microsoft announced a new partnership with the University of Washington to establish the Center for Research and Education on Accessible Technology (CREATE) and kicked off the collaboration with an inaugural investment of $2.5 million. CREATE is an interdisciplinary team whose mission is to make technology, and the world, more accessible.

The CREATE leadership team will be drawn from six campus departments and three colleges, and includes the Steele lab’s own Heather Feldner and Katherine M. Steele. This fantastic news was featured in The Seattle Times and GeekWire.

Get excited and help us congratulate Heather, Kat, and everyone involved as we cheer them on to CREATE!